NotionCommotion




Current search functionality strategies

NotionCommotion replied to NotionCommotion's topic in PHP Coding Help

Definitely, metadata will be searched, and "maybe" content will be searched for some document types, but I'm not sure yet. Per your link, "Solr is the popular, blazing-fast, open source enterprise search platform built on Apache Lucene", and it appears that Apache Lucene is "just?" a Java library used for full-text search of documents. If I only need full-text searching, do you think it is necessary, or should I just use PostgreSQL's? Also, I'm not sure yet whether the txtai library I referenced does much more, and I really haven't spent much time learning about full-text searching. I'm trying to understand how the app database and searching using a dedicated server work together. It's my understanding that I send the stuff I wish to search for later to Solr/Lucene/txtai, which in turn indexes it so it can be searched later. It seems like the same metadata would then be in both my SQL DB and the search engine's DB, which seems weird.

I am working on a SQL-backed document management API where the documents will often reside in one of several categories (e.g. subject, type, the project which created it, etc.). I am looking into different ways to implement search functionality, such as:

1. Standard WHERE with LIKE and maybe regex.
2. Full-text queries (I happen to be using PostgreSQL).
3. 3rd party semantic search libraries such as https://github.com/neuml/txtai
4. 3rd party machine learning libraries such as https://php-ml.readthedocs.io/en/latest/
5. API calls to some 3rd party web service.
6. Other?

Is there any approach which is best for most applications? Or, as I expect, does the "best" approach depend on the actual application requirements, and if so, can you please share your decision criteria?
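To make the first two options easier to compare, here is a minimal sketch of how the same search might be phrased with LIKE versus PostgreSQL full-text search. The `document` table and its `title` and `body` columns are hypothetical, as are the two helper functions; they only build the SQL and the bound parameter, so nothing here touches a real database.

```php
<?php
declare(strict_types=1);

// Hypothetical schema: a "document" table with "id", "title" and "body" columns.

/** Option 1: substring match. Simple, but no stemming and no relevance ranking. */
function likeSearch(string $term): array
{
    // Escape LIKE wildcards so user input is matched literally.
    $escaped = strtr($term, ['\\' => '\\\\', '%' => '\\%', '_' => '\\_']);
    $sql = <<<'SQL'
        SELECT d.id, d.title
        FROM document d
        WHERE concat_ws(' ', d.title, d.body) ILIKE :q
        SQL;
    return [$sql, ['q' => '%' . $escaped . '%']];
}

/** Option 2: PostgreSQL full-text search with stemming and ranking. */
function fullTextSearch(string $term): array
{
    $sql = <<<'SQL'
        SELECT d.id, d.title,
               ts_rank(to_tsvector('english', concat_ws(' ', d.title, d.body)), query) AS rank
        FROM document d, plainto_tsquery('english', :q) AS query
        WHERE to_tsvector('english', concat_ws(' ', d.title, d.body)) @@ query
        ORDER BY rank DESC
        SQL;
    return [$sql, ['q' => $term]];
}
```

Either result can be passed to PDO with `$stmt = $pdo->prepare($sql); $stmt->execute($params);`. For anything beyond a small table, the full-text version would normally also want a GIN index on the same `to_tsvector(...)` expression.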

-

Thanks, exactly what I was hoping for! Tried without the "from" but obviously didn't do what I wished for.

-

The following works, but it seems like it has one more loop than it should. Is there a better way to do so? I could get rid of the generator and merge to an array, but the generator seems like a better solution. Thanks

    private function doSomething(File $file)
    {
        foreach($this->getTextFiles($file) as $textFile) {
            // ...
        }
    }

    private function getTextFiles(File $file):\Generator
    {
        foreach($file->getChildren() as $child) {
            if($child instanceof IsTextFile) {
                yield $child;
            }
            else {
                foreach($this->getTextFiles($child) as $grandChild) {
                    yield $grandChild;
                }
            }
        }
    }
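The inner loop can be replaced with generator delegation via `yield from`. Below is a minimal, self-contained sketch; the `File`, `TextFile`, and `IsTextFile` definitions are hypothetical stand-ins for the real types, and `getTextFiles()` is written as a plain function instead of a private method so it can run on its own.

```php
<?php
declare(strict_types=1);

// Hypothetical stand-ins for the File / IsTextFile types used in the post.
interface IsTextFile {}

class File
{
    /** @param File[] $children */
    public function __construct(public string $name, private array $children = []) {}

    /** @return File[] */
    public function getChildren(): array
    {
        return $this->children;
    }
}

class TextFile extends File implements IsTextFile {}

function getTextFiles(File $file): \Generator
{
    foreach ($file->getChildren() as $child) {
        if ($child instanceof IsTextFile) {
            yield $child;
        } else {
            // Delegate to the recursive generator instead of looping over it.
            yield from getTextFiles($child);
        }
    }
}
```

One thing to watch: `yield from` preserves the keys of the inner generator, so keys repeat across levels. That is harmless in a `foreach`, but use `iterator_to_array($generator, false)` if you ever collect the results into an array.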

-

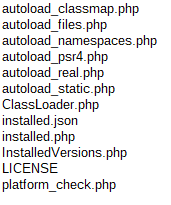

This is probably a stupid question. I wish to get a collection of classes which exist in a given directory, and found this post which directed me to this class, but it is deprecated and it appears that this class should now be used. Great, I have Composer, and all I need to do is call Composer\Autoload\ClassMapGenerator::createMap($pathToDirectory)! Or do I? The only files included in vendor/composer are below.

    autoload_classmap.php
    autoload_files.php
    autoload_namespaces.php
    autoload_psr4.php
    autoload_real.php
    autoload_static.php
    ClassLoader.php
    installed.json
    installed.php
    InstalledVersions.php
    LICENSE
    platform_check.php

I expect this is due to how I installed composer globally. Should composer classes ever be used in an application other than for dependency management? Or should I just create my own class for this need?
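For the "create my own class" route, here is a rough sketch of one way to do it with PHP 8's built-in tokenizer (`PhpToken`). This is not how Composer does it; `findClasses()` is a hypothetical helper that only handles `class` declarations (not interfaces, traits, or enums), and it reads the files without including them.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: maps each fully qualified class name declared
 * under $dir to the file that declares it.
 *
 * @return array<string, string>
 */
function findClasses(string $dir): array
{
    $map = [];
    $files = new RecursiveIteratorIterator(
        new RecursiveDirectoryIterator($dir, FilesystemIterator::SKIP_DOTS)
    );
    foreach ($files as $file) {
        if (!$file->isFile() || $file->getExtension() !== 'php') {
            continue;
        }
        // Drop whitespace and comments so "next token" lookups stay simple.
        $tokens = array_values(array_filter(
            PhpToken::tokenize(file_get_contents($file->getPathname())),
            fn (PhpToken $t): bool => !$t->isIgnorable()
        ));
        $namespace = '';
        foreach ($tokens as $i => $token) {
            $prev = $tokens[$i - 1] ?? null;
            $next = $tokens[$i + 1] ?? null;
            if ($token->is(T_NAMESPACE)) {
                // "namespace Foo\Bar;" sets a prefix, "namespace { }" resets it.
                $namespace = $next?->is([T_STRING, T_NAME_QUALIFIED]) ? $next->text . '\\' : '';
            } elseif (
                $token->is(T_CLASS)
                && $next?->is(T_STRING)          // skips anonymous classes
                && !$prev?->is(T_DOUBLE_COLON)   // skips Foo::class constants
            ) {
                $map[$namespace . $next->text] = $file->getPathname();
            }
        }
    }
    return $map;
}
```

Calling `findClasses($pathToDirectory)` returns an array keyed by class name, similar in shape to a class map, which can then be filtered with `is_subclass_of()` or reflection once the classes are autoloadable.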

-

Thanks kicken, Was just trying to get out of the measly two unlink statements, and will stop trying.

-

I didn't think I was quite reinventing tempnam(). tempnam() doesn't delete the file when complete, correct? The example in the doc shows calling unlink($tmpfname). However, if calling stream_get_meta_data() deletes the file and I just create it back, and PHP no longer thinks it is responsible for deleting it, I suppose I am reinventing it.

-

I will be using exec() to execute an external program which requires a file output path and will create a file located at that path. The file is only needed during the execution of the PHP script and should be deleted after the script ends. Good or bad idea? If bad, why? Thanks

    public function tmpFile():string
    {
        return stream_get_meta_data(tmpfile())['uri'];
    }

EDIT - Does "when the script ends" happen about the same time as the class's destructor?
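The catch with the snippet above is that nothing keeps the handle returned by tmpfile(), and per the PHP manual the file is removed once there are no remaining references to that handle, so the returned path most likely no longer exists by the time exec() runs. A minimal sketch of the tempnam() plus unlink() alternative, wrapped in a hypothetical `TempFiles` helper that cleans up in its destructor:

```php
<?php
declare(strict_types=1);

/** Hypothetical helper: hands out temp file paths and removes them when destroyed. */
final class TempFiles
{
    /** @var string[] paths created by this instance */
    private array $paths = [];

    public function create(string $prefix = 'tmp'): string
    {
        // tempnam() creates an empty file and returns its path; it never deletes it.
        $path = tempnam(sys_get_temp_dir(), $prefix);
        if ($path === false) {
            throw new RuntimeException('Unable to create a temporary file.');
        }
        return $this->paths[] = $path;
    }

    public function __destruct()
    {
        foreach ($this->paths as $path) {
            if (is_file($path)) {
                unlink($path);
            }
        }
    }
}
```

Usage would be along the lines of `$path = $tempFiles->create('out');` followed by `exec('some-program ' . escapeshellarg($path));`, where `some-program` is a placeholder. The files live as long as the `TempFiles` object does, so keep it referenced until the output has been read.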

-

Return $this if static method called on an object

NotionCommotion replied to NotionCommotion's topic in PHP Coding Help

Thanks maxxd, I'll check out the facade object/pattern, but likely won't use it for this case. Thanks kicken, in hindsight, I agree chaining doesn't bring much value for this case. "If it did" (which it doesn't), I guess I could just add another traditional method which would call the static method and directly return $this (but I won't).

Is it possible to return the instance of an object when a static method is called on it? For instance, (new SomeClass)->echo() returns $this and SomeClass::echo() returns a newly created object? Thinking I could do so by making properties also static and then creating the new object and populating it (albeit it will be a clone and not the same object). Thanks

    <?php
    class SomeClass
    {
        private $services = [];
        private $paramaters = [];

        public function Foo():self
        {
            echo(__METHOD__);
            return $this;
        }

        public static function echo(string $msg, array $data=[], bool $syslog = false):self|static
        {
            echo($msg.($data?(': data: '.json_encode($data)):'').PHP_EOL);
            return $syslog?self::syslog($msg, $data):$this->getSelfOrStatic();
        }

        public static function syslog(string $msg, array $data=[], bool $echo = false):self|static
        {
            syslog(LOG_INFO, $msg.($data?(': data: '.json_encode($data)):''));
            return $echo?self::echo($msg, $data):$this->getSelfOrStatic();
        }

        private static function getSelfOrStatic():self|static
        {
            return isset($this)?$this:new static();
        }
    }
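As written, this cannot work because $this is never available inside a static method. One way to get the described behavior is to leave the public method undeclared and route calls through the `__call()` and `__callStatic()` magic methods. A minimal sketch, with a hypothetical `Notifier` class and `log` method standing in for the real ones:

```php
<?php
declare(strict_types=1);

final class Notifier
{
    /** @var string[] messages recorded by this instance */
    public array $messages = [];

    // $obj->log('x') lands here because log() is not a declared method.
    public function __call(string $name, array $args): static
    {
        self::assertKnown($name);
        return $this->record(...$args);
    }

    // Notifier::log('x') lands here and works on a fresh instance.
    public static function __callStatic(string $name, array $args): static
    {
        self::assertKnown($name);
        return (new static())->record(...$args);
    }

    private function record(string $msg): static
    {
        $this->messages[] = $msg;
        return $this;
    }

    private static function assertKnown(string $name): void
    {
        if ($name !== 'log') {
            throw new BadMethodCallException("Unknown method: $name");
        }
    }
}
```

The trade-offs are that IDEs and static analysis cannot see `log()` without a `@method` annotation, and a `Notifier::log()` call made from inside an instance method of the same class is routed to `__call()` rather than `__callStatic()`. That fits the conclusion in the reply: the plain instance-method wrapper is usually the simpler choice.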

-

implementing polymorphism and inheritance

NotionCommotion replied to bernardnthiwa's topic in PHP Coding Help

Super/sub type DB modelling provides a more structured approach to inheritance, centralizes the conditional statement logic, and IMO provides polymorphism. However, be careful: if taken too far, it will likely paint you into a corner that you didn't want to be in, so use it sparingly and only when the benefits outweigh the risks.

I mostly figured out the SQL error. It appears this was being called by the Dockerfile, which added a table called user that I had been using, and I didn't realize it was called when the image was being created. Maybe I got some technicalities wrong, but changing it got rid of the SQL errors.

    # api/docker/php/docker-entrypoint.sh
    #!/bin/sh
    # bla bla bla...
            if [ "$( find ./migrations -iname '*.php' -print -quit )" ]; then
                php bin/console doctrine:migrations:migrate --no-interaction
            fi
            if [ "$APP_ENV" != 'prod' ]; then
                echo "Load fixtures"
                bin/console hautelook:fixtures:load --no-interaction
            fi
        fi
    fi
    exec docker-php-entrypoint "$@"

I still don't understand the composer part and the not found classes. For instance, I see stof/doctrine-extensions-bundle (v1.7.1) being installed first, but then I get an Uncaught Error: Class "Stof\DoctrineExtensionsBundle\StofDoctrineExtensionsBundle" not found error. The only place I see that class being used is in bundles.php, and I suppose I could comment it out like I did for libphonenumber\PhoneNumberUtil, but then I suspect some other issue will pop up. Not sure where to go next on this...

EDIT. Maybe I got lucky! Commented it out and at least PHP is not crashing. Still need to better understand how all this stuff works...
    facdocs-consumer-1 exited with code 255
    facdocs-php-1 | - Installing behat/transliterator (v1.5.0): Extracting archive
    facdocs-php-1 | - Installing brick/money (0.8.0): Extracting archive
    facdocs-php-1 | - Installing doctrine/doctrine-fixtures-bundle (3.4.2): Extracting archive
    facdocs-php-1 | - Installing fig/link-util (1.2.0): Extracting archive
    facdocs-php-1 | - Installing gesdinet/jwt-refresh-token-bundle (v1.1.1): Extracting archive
    facdocs-php-1 | - Installing giggsey/locale (2.3): Extracting archive
    facdocs-php-1 | - Installing laminas/laminas-code (4.8.0): Extracting archive
    facdocs-php-1 | - Installing symfony/translation (v6.1.11): Extracting archive
    facdocs-php-1 | - Installing nesbot/carbon (2.66.0): Extracting archive
    facdocs-php-1 | - Installing nilportugues/sql-query-formatter (v1.2.2): Extracting archive
    facdocs-php-1 | - Installing notion-commotion/attribute-validator (1.01): Extracting archive
    facdocs-php-1 | - Installing notion-commotion/attribute-validator-command (1.06): Extracting archive
    facdocs-php-1 | - Installing symfony/intl (v6.1.11): Extracting archive
    facdocs-php-1 | - Installing giggsey/libphonenumber-for-php (8.13.6): Extracting archive
    facdocs-php-1 | - Installing odolbeau/phone-number-bundle (v3.9.1): Extracting archive
    facdocs-php-1 | - Installing phpstan/phpdoc-parser (1.16.1): Extracting archive
    facdocs-php-1 | - Installing sebastian/version (3.0.2): Extracting archive
    facdocs-php-1 | - Installing sebastian/type (3.2.1): Extracting archive
    facdocs-php-1 | - Installing sebastian/resource-operations (3.0.3): Extracting archive
    facdocs-php-1 | - Installing sebastian/object-reflector (2.0.4): Extracting archive
    facdocs-php-1 | - Installing sebastian/object-enumerator (4.0.4): Extracting archive
    facdocs-php-1 | - Installing sebastian/global-state (5.0.5): Extracting archive
    facdocs-php-1 | - Installing sebastian/environment (5.1.5): Extracting archive
    facdocs-php-1 | - Installing sebastian/code-unit (1.0.8): Extracting archive
    facdocs-php-1 | - Installing sebastian/cli-parser (1.0.1): Extracting archive
    facdocs-php-1 | - Installing phpunit/php-timer (5.0.3): Extracting archive
    facdocs-php-1 | - Installing phpunit/php-text-template (2.0.4): Extracting archive
    facdocs-php-1 | - Installing phpunit/php-invoker (3.1.1): Extracting archive
    facdocs-php-1 | - Installing phpunit/php-file-iterator (3.0.6): Extracting archive
    facdocs-php-1 | - Installing theseer/tokenizer (1.2.1): Extracting archive
    facdocs-php-1 | - Installing sebastian/lines-of-code (1.0.3): Extracting archive
    facdocs-php-1 | - Installing sebastian/complexity (2.0.2): Extracting archive
    facdocs-php-1 | - Installing sebastian/code-unit-reverse-lookup (2.0.3): Extracting archive
    facdocs-php-1 | - Installing phpunit/php-code-coverage (9.2.24): Extracting archive
    facdocs-php-1 | - Installing phar-io/version (3.2.1): Extracting archive
    facdocs-php-1 | - Installing phar-io/manifest (2.0.3): Extracting archive
    facdocs-php-1 | - Installing phpunit/phpunit (9.6.3): Extracting archive
    facdocs-php-1 | - Installing phpstan/phpstan (1.10.2): Extracting archive
    facdocs-php-1 | - Installing rector/rector (0.15.18): Extracting archive
    facdocs-php-1 | - Installing gedmo/doctrine-extensions (v3.11.1): Extracting archive
    facdocs-php-1 | - Installing stof/doctrine-extensions-bundle (v1.7.1): Extracting archive
    facdocs-php-1 | - Installing symfony/polyfill-intl-idn (v1.27.0): Extracting archive
    facdocs-php-1 | - Installing symfony/mime (v6.1.11): Extracting archive
    facdocs-php-1 | - Installing egulias/email-validator (4.0.1): Extracting archive
    facdocs-php-1 | - Installing symfony/mailer (v6.1.11): Extracting archive
    facdocs-php-1 | - Installing symfony/polyfill-intl-icu (v1.27.0): Extracting archive
    facdocs-php-1 | - Installing friendsofphp/proxy-manager-lts (v1.0.14): Extracting archive
    facdocs-php-1 | - Installing symfony/proxy-manager-bridge (v6.1.11): Extracting archive
    facdocs-php-1 | - Installing symfony/polyfill-uuid (v1.27.0): Extracting archive
    facdocs-php-1 | - Installing symfony/uid (v6.1.11): Extracting archive
    facdocs-php-1 | - Installing symfony/templating (v6.1.11): Extracting archive
    facdocs-php-1 | - Installing symfony/form (v6.1.11): Extracting archive
    facdocs-php-1 | - Installing moneyphp/money (v3.3.3): Extracting archive
    facdocs-php-1 | - Installing tbbc/money-bundle (5.0.1): Extracting archive
    facdocs-php-1 | Package webmozart/path-util is abandoned, you should avoid using it. Use symfony/filesystem instead.
    facdocs-php-1 | Generating optimized autoload files
    facdocs-consumer-1 | 2023-02-24T18:05:33+00:00 [critical] Uncaught Error: Class "Stof\DoctrineExtensionsBundle\StofDoctrineExtensionsBundle" not found
    facdocs-consumer-1 | Symfony\Component\ErrorHandler\Error\ClassNotFoundError {#69
    facdocs-consumer-1 | #message: """
    facdocs-consumer-1 | Attempted to load class "StofDoctrineExtensionsBundle" from namespace "Stof\DoctrineExtensionsBundle".\n
    facdocs-consumer-1 | Did you forget a "use" statement for another namespace?
    facdocs-consumer-1 | """
    facdocs-consumer-1 | #code: 0
    facdocs-consumer-1 | #file: "./vendor/symfony/framework-bundle/Kernel/MicroKernelTrait.php"
    facdocs-consumer-1 | #line: 135
    facdocs-consumer-1 | trace: {
    facdocs-consumer-1 | ./vendor/symfony/framework-bundle/Kernel/MicroKernelTrait.php:135 { …}
    facdocs-consumer-1 | ./vendor/symfony/http-kernel/Kernel.php:382 { …}
    facdocs-consumer-1 | ./vendor/symfony/http-kernel/Kernel.php:766 { …}
    facdocs-consumer-1 | ./vendor/symfony/http-kernel/Kernel.php:128 { …}
    facdocs-consumer-1 | ./vendor/symfony/framework-bundle/Console/Application.php:166 { …}
    facdocs-consumer-1 | ./vendor/symfony/framework-bundle/Console/Application.php:72 { …}
    facdocs-consumer-1 | ./vendor/symfony/console/Application.php:171 { …}
    facdocs-consumer-1 | ./vendor/symfony/runtime/Runner/Symfony/ConsoleApplicationRunner.php:54 { …}
    facdocs-consumer-1 | ./vendor/autoload_runtime.php:29 { …}
    facdocs-consumer-1 | ./bin/console:11 {
    facdocs-consumer-1 | ›
    facdocs-consumer-1 | › require_once dirname(__DIR__).'/vendor/autoload_runtime.php';
    facdocs-consumer-1 | ›
    facdocs-consumer-1 | arguments: {
    facdocs-consumer-1 | "/srv/app/vendor/autoload_runtime.php"
    facdocs-consumer-1 | }
    facdocs-consumer-1 | }
    facdocs-consumer-1 | }
    facdocs-consumer-1 | }
    facdocs-consumer-1 exited with code 255

-

Thanks maxxd! Not quite production or even close, unfortunately... The current objective is to provide a workable system so the frontend people can better understand what they need to do on their end. I probably shouldn't be going down this Docker path as it seems like a black hole to me, but the main reason I am doing so is that it allows me to better show the frontend people what they need to interface with by "automatically" creating a super-basic client app. I somewhat got things working, but then restarted Docker and had SQL problems... I know it can't be magic, but not being able to identify what is initiating the script which is causing me grief makes me kind of hate Docker. I've made some progress and see that it is calling a PHP script to initiate things, but even after I comment out what I think are the culprits, there are still issues... Will try again tomorrow!