
Everything posted by guymclarenza

-

.htaccess file:

```apache
RewriteEngine On
RewriteCond %{HTTP_HOST} ^XXXXX\.com [NC]
RewriteCond %{SERVER_PORT} 80
RewriteRule ^(.*)$ https://XXXXX.com/$1 [R=301,L]
RewriteRule ^([^/\.]+)\.html$ pages.php?furl=$1 [L]
```

The first rule sends plain-HTTP requests for XXXXX.com to HTTPS with a 301 redirect. The second grabs the part of the URL between `/` and `.html` and passes it to `pages.php` as `furl`, a variable you can use in your code.

PHP file:

```php
$ident = $_GET["furl"] ?? "";
$query = $pdo->prepare("SELECT * FROM pages WHERE page_website = :site AND page_url = :purl ORDER BY page_id DESC LIMIT 0,1");
$query->bindParam(":site", $site);
$query->bindParam(":purl", $ident);
$query->execute();
```

I hope this helps.
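To check what the second rewrite rule captures, here is a minimal sketch of the same pattern in PHP. `furlFromPath()` is a hypothetical helper, not something Apache or the original code provides; it assumes per-directory (`.htaccess`) matching, where the leading slash is not part of the path the rule sees. The dot before `html` is escaped so it only matches a literal dot.

```php
<?php
/**
 * Mirrors the .htaccess rule  ^([^/\.]+)\.html$  ->  pages.php?furl=$1
 * Hypothetical helper: returns the captured slug, or null if the rule would not match.
 */
function furlFromPath(string $path): ?string
{
    // In .htaccess context the path is matched without its leading slash.
    $path = ltrim($path, '/');

    // One path segment (no slashes, no dots) followed by a literal ".html".
    if (preg_match('~^([^/.]+)\.html$~', $path, $m) === 1) {
        return $m[1];
    }
    return null;
}

// Usage: furlFromPath('/about-us.html') gives 'about-us'; nested paths give null.
```

Because `[^/.]` excludes slashes, a URL like `/blog/post.html` does not match the rule and is left alone.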

-

I want to run this loop until it has created 50 or 100 links at most. At present it keeps running even though nothing new is being added to the database. I suspect it keeps looping through, collecting duplicates and then not adding them to the database. I see two options: either I limit the number of loops, or I find some way to skip pages that have already been crawled.

I am busy doing a PHP course and the lecturer is not answering questions about how the code could be improved. I figure that if you are going to do something in a training course, it should be able to do what it needs to, and if it doesn't, it needs a little alteration. Right now this keeps looping until a 503 error happens, and then I am not sure whether it is still running server side. In effect it does what I want it to do, and the changes I made have solved a few issues.

How do I get it to stop before it crashes? I am thinking that limiting the instances of createLink is the solution, but I am at sea here.

```php
function followLinks($url) {
    global $alreadyCrawled;
    global $crawling;
    global $hosta;

    $parser = new DomDocumentParser($url);
    $linkList = $parser->getLinks();

    foreach($linkList as $link) {
        $href = $link->getAttribute("href");

        if((substr($href, 0, 3) !== "../") AND (strpos($href, "imagimedia") === false)) {
            continue;
        }
        else if(strpos($href, "#") !== false) {
            continue;
        }
        else if(substr($href, 0, 11) == "javascript:") {
            continue;
        }

        $href = createLink($href, $url);

        if(!in_array($href, $alreadyCrawled)) {
            $alreadyCrawled[] = $href;
            $crawling[] = $href;

            getDetails($href);
        }
    }

    array_shift($crawling);

    foreach($crawling as $site) {
        followLinks($site);
    }
}
```
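Both options in the question can be combined: keep a set of URLs already seen, work through a queue instead of recursing, and stop after a fixed number of pages. As far as I can tell, each recursive `followLinks()` call loops over the whole global `$crawling` array again, so the same URLs can be passed to `followLinks()` many times and the recursion keeps deepening. The sketch below is an assumption-laden illustration, not the course's code: `crawl()` is a hypothetical name, and `$getLinks` stands in for the `DomDocumentParser` + filters + `createLink()` part of the post.

```php
<?php
/**
 * Iterative, capped crawl (a sketch).
 *
 * $getLinks: hypothetical callable that, given a URL, returns the absolute,
 * already-filtered URLs found on that page.
 */
function crawl(string $startUrl, callable $getLinks, int $maxPages = 100): array
{
    $seen = [$startUrl => true]; // every URL ever queued, so nothing is queued twice
    $queue = [$startUrl];        // FIFO work list replaces the recursive calls
    $crawled = [];

    while ($queue !== [] && count($crawled) < $maxPages) {
        $url = array_shift($queue);
        $crawled[] = $url;       // getDetails($url) / the database insert would go here

        foreach ($getLinks($url) as $href) {
            if (!isset($seen[$href])) {
                $seen[$href] = true;
                $queue[] = $href;
            }
        }
    }
    return $crawled;
}
```

The loop ends either when the queue runs dry (every reachable page seen once) or when `$maxPages` is reached, whichever comes first, so pages that link back to each other cannot keep it running.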

-

I have a field, `gmap`. The data would be a variation of the one below: the Google Maps embed data that places the map on the site. The rest of the iframe string is in my code because I want to size the iframe etc. When I use an input form to update this field with null data, or with a simple text line like "yellow", the script works. When I try to add data like the following, I get a 403 error. How can I resolve this?

https://www.google.com/maps/embed?pb=!1m18!1m12!1m3!1d3573.197380400117!2d28.465316315605516!3d-26.41710627907957!2m3!1f0!2f0!3f0!3m2!1i1024!2i768!4f13.1!3m3!1m2!1s0x1e94d9c942963de9%3A0xc560ec1cd5d52b74!2sArrie%20Nel%20Nigel%20Pharmacy!5e0!3m2!1sen!2sza!4v1603786030983!5m2!1sen!2sza

Should I str_replace all the `!` characters with their ASCII codes, or do something else?
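A 403 on a form submit usually comes from the server side (for example a mod_security rule reacting to the request body) rather than from PHP; that is a guess the post doesn't confirm, and the host's error log would show it. On the PHP side, the `!` characters need no `str_replace`: they are legal in URLs and in HTML attributes. A minimal sketch of building the sized iframe from the stored value, where `mapIframe()` and the "must start with the embed URL" check are assumptions for illustration:

```php
<?php
/**
 * Builds the map iframe from a stored Google Maps embed URL (hypothetical helper).
 * htmlspecialchars() escapes &, quotes, < and >; "!" and "%3A" pass through unchanged.
 */
function mapIframe(string $embedUrl, int $width = 600, int $height = 450): string
{
    // Assumption: the gmap field should only ever hold a Google Maps embed URL.
    if (strpos($embedUrl, 'https://www.google.com/maps/embed?') !== 0) {
        throw new InvalidArgumentException('Not a Google Maps embed URL');
    }

    // The URL is a sprintf argument, not part of the format string,
    // so any "%" characters in it are safe.
    return sprintf(
        '<iframe src="%s" width="%d" height="%d" style="border:0;" loading="lazy" allowfullscreen></iframe>',
        htmlspecialchars($embedUrl, ENT_QUOTES, 'UTF-8'),
        $width,
        $height
    );
}
```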

-

```php
if($pid != "") {
    $bname = $_REQUEST['bname'];
    $btitle = $_REQUEST['btitle'];
    $btags = $_REQUEST['btags'];
    $bdesc = $_REQUEST['bdesc'];
    $btext = $_REQUEST['btext'];
    $bimg = $_REQUEST['bimg'];
    $bimgalt = $_REQUEST['bimgalt'];

    $data = "";
    if($bname!="") { $data = $data." 'bname' => ".$bname.", "; }
    if($btitle!="") { $data = $data."'btitle' => ".$btitle.", "; }
    if($btags!="") { $data = $data."'btags' => ".$btags.", "; }
    if($bdesc!="") { $data = $data."'bdesc' => ".$bdesc.", "; }
    if($btext!="") { $data = $data."'btext' => ".$btext.", "; }
    if($bimg!="") { $data = $data."'bimg' => ".$bimg.", "; }
    if($bimgalt!="") { $data = $data."'bimgalt' => ".$bimgalt.", "; }
    $data = $data."'pid ' =>". $pid.", " ;
    $data = "[".$data."]";

    $build = "";
    if($bname!="") { $build = $build." page_name = :bname,"; }
    if($btitle!="") { $build = $build." page_title = :btitle,"; }
    if($btags!="") { $build = $build." page_tags = :btags,"; }
    if($bdesc!="") { $build = $build." page_desc = :bdesc,"; }
    if($btext!="") { $build = $build." page_text = :btext,"; }
    if($bimg!="") { $build = $build." page_img = :bimg,"; }
    if($bimgalt!="") { $build = $build." page_imgalt = :bimgalt,"; }
    $build = $build." page_id = :pid";

    $sql = "UPDATE pages SET ".$build." WHERE page_id=:pid";
    echo $sql."<br /><br />";
    echo $data."<br /><br />";
    $stmt= $pdo->prepare($sql);
    $stmt->execute($data);
```

Result of echoing the SQL and the data:

```
UPDATE pages SET page_name = :bname, page_title = :btitle, page_tags = :btags, page_desc = :bdesc, page_text = :btext, page_img = :bimg, page_imgalt = :bimgalt, page_id = :pid WHERE page_id=:pid

[ 'bname' => Home, 'btitle' => Market Net, 'btags' => full stack development, search engine ready, professional web development, web design, 'bdesc' => Market net gives you online access to market traders country wide, Support Local businesses today, 'btext' => Market Net is the place for you to find those goodies you saw on the markets, buy them online and support small local businesses. 
Watch this space for more information. Test #D hvac , 'bimg' => supportlocal.jpg, 'bimgalt' => Support Local Small Businesses, 'pid ' =>106, ]
```

Yet I am still getting the error message. What am I doing wrong?
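The main problem is that `$stmt->execute($data)` is handed a string that only looks like a PHP array; `PDOStatement::execute()` needs a real array of values. Two smaller issues: the `'pid '` key has a trailing space, and `:pid` appears twice, which PDO rejects when emulated prepares are off. A minimal sketch that builds the SQL and the parameter array together; `buildPageUpdate()` is a hypothetical name, and the field-to-column map is taken from the post.

```php
<?php
/**
 * Builds an UPDATE for only the fields that were filled in (hypothetical helper).
 * Returns [$sql, $params]; $sql is null when there is nothing to update.
 */
function buildPageUpdate(array $input, int $pid): array
{
    // form field => database column (from the original post)
    $columns = [
        'bname'   => 'page_name',
        'btitle'  => 'page_title',
        'btags'   => 'page_tags',
        'bdesc'   => 'page_desc',
        'btext'   => 'page_text',
        'bimg'    => 'page_img',
        'bimgalt' => 'page_imgalt',
    ];

    $set = [];
    $params = [];
    foreach ($columns as $field => $column) {
        $value = $input[$field] ?? '';
        if ($value !== '') {
            $set[] = "$column = :$field"; // placeholder named after the form field
            $params[$field] = $value;     // PDO accepts keys with or without ':'
        }
    }
    if ($set === []) {
        return [null, []];
    }

    $params['pid'] = $pid; // used once, in the WHERE clause only
    $sql = 'UPDATE pages SET ' . implode(', ', $set) . ' WHERE page_id = :pid';
    return [$sql, $params];
}

// Usage (assuming $pdo is an open PDO connection):
// [$sql, $params] = buildPageUpdate($_POST, (int) $pid);
// if ($sql !== null) { $pdo->prepare($sql)->execute($params); }
```

`implode()` also removes the need to tack `page_id = :pid` on the end just to avoid a trailing comma.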

-

It may be an urban myth, but over the years I have found that I get better results with keyword-rich URLs. 1.html will never do as well as keyword.html. Very long URLs also don't work as well as concise ones: a_keyword_rich_phrase_with_a_description.html will underperform keyword_rich.html. This is from my own experience and has no scientific data to support it. Content is far more important than this; good content will always perform better than poor content, no matter what naming convention you use.

-

Google will follow the redirects in your .htaccess and start pointing people at the new URLs once it has recrawled the site and found them. You should not be punished for making your pages more structured; if anything, you should get plus points.

-

I do this all the time. I don't like repeating the same code on every page, so when I have duplication I use an include file; I first started doing this with SHTML. I don't see any speed issues on my sites. I have been coding for a while, but I am not a great coder, so someone with more experience may differ. If you want to see it in action, check out the site I am busy working on. I use very simple CSS on this one; you are welcome to view the source and borrow.

```php
include ("../navigation/constants.php");

// SQL here to get data

// build metatags
$indTags = "<title>$title </title>\n";
$indTags .= "<meta name='description' content='$metadesc' />\n";
$indTags .= "<link rel='canonical' href='$canon' />\n";
$indTags .= "<meta property='og:title' content='$title' />\n";
$indTags .= "<meta property='og:type' content='website' />\n";
$indTags .= "<meta property='og:url' content='$canon' />\n";
$indTags .= "<meta name='keywords' content='$tags' />\n";
$indTags .= "<meta property='og:image' content='$ogimg' />\n";
}
$indTags .= "<meta http-equiv='Content-Type' content='text/html; charset=utf-8' />\n<meta name='viewport' content='width=device-width, initial-scale=1.0'>\n\n";

// lots of includes
include ("../navigation/head.php");
include ("../css/style.php");
include ("../navigation/seman.php");
include ("../navigation/top.php");
```
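The tag-building code drops database values straight into single-quoted attributes, so a title such as "Joe's Shop" would end the attribute early. A minimal sketch of the same tags with every value passed through `htmlspecialchars()`; `buildMetaTags()` is a hypothetical name, and the array keys simply mirror the variable names above.

```php
<?php
/**
 * Builds the head meta tags with each value escaped for HTML (hypothetical helper).
 * Expected keys: title, metadesc, canon, tags, ogimg (missing keys become '').
 */
function buildMetaTags(array $page): string
{
    // ENT_QUOTES also escapes single quotes, which these attributes use.
    $v = static fn(string $k): string => htmlspecialchars((string) ($page[$k] ?? ''), ENT_QUOTES, 'UTF-8');

    $tags  = '<title>' . $v('title') . "</title>\n";
    $tags .= "<meta name='description' content='" . $v('metadesc') . "' />\n";
    $tags .= "<link rel='canonical' href='" . $v('canon') . "' />\n";
    $tags .= "<meta property='og:title' content='" . $v('title') . "' />\n";
    $tags .= "<meta property='og:type' content='website' />\n";
    $tags .= "<meta property='og:url' content='" . $v('canon') . "' />\n";
    $tags .= "<meta name='keywords' content='" . $v('tags') . "' />\n";
    $tags .= "<meta property='og:image' content='" . $v('ogimg') . "' />\n";
    return $tags;
}
```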

-

This is a pain in the bottom, everything should do as is.

guymclarenza replied to guymclarenza's topic in PHP Coding Help

Thank you, I knew it would be something obvious. -

PHP problem when no records from database

guymclarenza replied to JoshEir's topic in PHP Coding Help

I have been playing with it on and off for almost 20 years, and I still need to learn new stuff every time I try to do something. I am doing silly courses like building a search engine just to see what the code does. -

I feel your pain. The only advice I have is this. We all know the saying "if a job is worth doing, it is worth doing well." I used to think like that until I started thinking about it more. I am not suggesting that you should do shoddy work; I am saying get out and do the job. You may not have the skills or the knowledge to do it perfectly, yet the satisfaction of getting it done will outweigh the concern about it not being perfect. Too many projects die a slow death because people want perfection. MS-DOS and Windows would still be just ideas if those jobs hadn't been worth doing badly. Get the job done. Then improve it, and improve your skills and knowledge, so that the job done badly can be made better once it's real.

-

I have been staring at this code for hours trying to figure out what is wrong, and I guarantee that someone is going to look at it and make me feel like a tool. The first query is not going through the list; the second one is. It worked until I started updating to PDO. I have tested the SQL and that works, so it's probably a flipping comma or a quotation mark screwing with my brain. Please look, spot the obvious balls-up and point it out to me.

I get a result that looks like this, and then it stops:

```
Heading
question
question
question
```

I am expecting:

```
Heading
question
question
question
Heading
question
question
question
```

Why is this failing? Please show a doddering old fool what I am doing wrong...

```php
$query = $pdo->prepare("SELECT * FROM faq_cats WHERE faqc_site = :site ORDER BY faqc_id ASC LIMIT 0,4");
$query->bindParam(":site", $site);
$query->execute();

while($row = $query->fetch(PDO::FETCH_ASSOC)) {
    $fid = $row["faqc_id"];
    $faqc = $row["faqc_name"];
    echo "<h2> ".$faqc." </h2>";

    $query = $pdo->prepare("SELECT * FROM faqs WHERE faq_cat = :fid ORDER BY faq_id ASC");
    $query->bindParam(":fid", $fid);
    $query->execute();

    while($row1 = $query->fetch(PDO::FETCH_ASSOC)) {
        $faid=$row1["faq_id"];
        $faqn=$row1["faq_question"];
        echo "<a href=\"#".$faid."\">".$faqn."</a><br />";
    }
}
```
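The inner `$query = $pdo->prepare(...)` overwrites the outer `$query`. After the first category's questions are printed, the outer `while` calls `fetch()` on the FAQ statement, which has no rows left, so the loop ends after one heading. A minimal sketch with separate statement variables; `renderFaqIndex()` is a hypothetical name, the inner statement is prepared once and re-executed per category, and output is escaped with `htmlspecialchars()` as an extra precaution.

```php
<?php
/**
 * Renders each FAQ category heading followed by its questions (hypothetical helper).
 * $catQuery and $faqQuery are separate variables, so the outer result set survives.
 */
function renderFaqIndex(PDO $pdo, string $site): string
{
    $catQuery = $pdo->prepare(
        'SELECT faqc_id, faqc_name FROM faq_cats WHERE faqc_site = :site ORDER BY faqc_id ASC LIMIT 4'
    );
    // Prepared once, executed once per category.
    $faqQuery = $pdo->prepare(
        'SELECT faq_id, faq_question FROM faqs WHERE faq_cat = :fid ORDER BY faq_id ASC'
    );

    $html = '';
    $catQuery->execute([':site' => $site]);
    while ($cat = $catQuery->fetch(PDO::FETCH_ASSOC)) {
        $html .= '<h2>' . htmlspecialchars($cat['faqc_name']) . "</h2>\n";

        $faqQuery->execute([':fid' => $cat['faqc_id']]);
        while ($faq = $faqQuery->fetch(PDO::FETCH_ASSOC)) {
            $html .= '<a href="#' . (int) $faq['faq_id'] . '">'
                   . htmlspecialchars($faq['faq_question']) . "</a><br />\n";
        }
    }
    return $html;
}
```

The sketch was written against the table and column names in the post; for a quick local check, an in-memory SQLite database with the same two tables works as a stand-in for MySQL.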

-

This worked till I added some code, Now it does not,

guymclarenza replied to guymclarenza's topic in PHP Coding Help

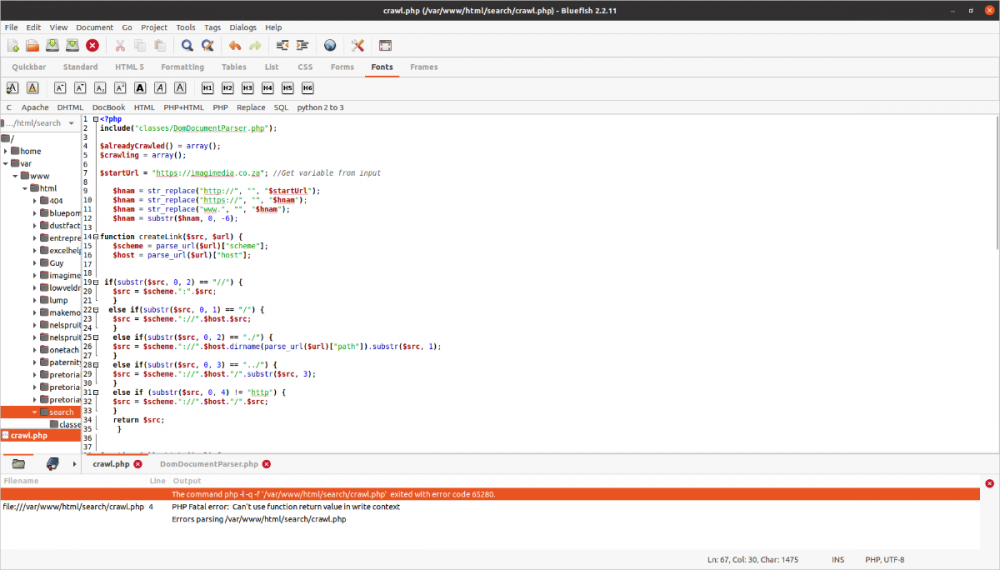

The error codes are at the bottom of the image. Oh FFS, now that you mention it, what a twat I am(). Thanks for pointing out the wood I couldn't see for the trees. -

```php
<?php
include("classes/DomDocumentParser.php");
//this could be the problem it appears in the error file
$alreadyCrawled() = array();
$crawling = array();
$startUrl = "https://imagimedia.co.za";
//Get variable from input
$hnam = str_replace("http://", "", "$startUrl");
$hnam = str_replace("https://", "", "$hnam");
$hnam = str_replace("www.", "", "$hnam");
$hnam = substr($hnam, 0, -6);

function createLink($src, $url) {
    $scheme = parse_url($url)["scheme"];
    $host = parse_url($url)["host"];

    if(substr($src, 0, 2) == "//") {
        $src = $scheme.":".$src;
    }
    else if(substr($src, 0, 1) == "/") {
        $src = $scheme."://".$host.$src;
    }
    else if(substr($src, 0, 2) == "./") {
        $src = $scheme."://".$host.dirname(parse_url($url)["path"]).substr($src, 1);
    }
    else if(substr($src, 0, 3) == "../") {
        $src = $scheme."://".$host."/".substr($src, 3);
    }
    else if (substr($src, 0, 4) != "http") {
        $src = $scheme."://".$host."/".$src;
    }
    return $src;
}

function followLinks($url) {
    global $hnam;
    global $alreadyCrawled;
    global $crawling;

    $parser = new DomDocumentParser($url);
    $linkList = $parser->getLinks();

    foreach($linkList as $link) {
        $href = $link->getAttribute("href");
        if(strpos($href, "#") !== false) {
            continue;
        }
        else if(substr($href, 0, 11) == "javascript:") {
            continue;
        }
        $href = createLink($href, $url);
        if(strpos($href, "$hnam") == false) {
            continue;
        }
        //this could be the problem
        if(!in_array($href, $alreadyCrawled)) {
            $alreadyCrawled[] = $href;
            $crawling[] = $href;
            //insert $href
        }
        echo $href."<br />";
    }
    array_shift($crawling);
    foreach($crawling as $site) {
        followLinks($site);
    }
}

followLinks($startUrl);
?>
```

Include file:

```php
<?php
class DomDocumentParser {
    private $doc;

    public function __construct($url) {
        $options = array(
            'http'=>array('method'=>"GET", 'header'=>"User-Agent: imagimediaBot/0.1\n")
        );
        $context = stream_context_create($options);
        $this->doc = new DomDocument();
        @$this->doc->loadHTML(file_get_contents($url, false, $context));
    }

    public function getLinks() {
        return $this->doc->getElementsByTagName("a");
    }
}
?>
```
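Besides the `$alreadyCrawled()` typo, the line marked `//this could be the problem` has a catch of its own: `strpos()` returns `0` for a match at the very start, and `0 == false` is true in PHP, so the check should use `=== false` as a rule. Even then, a substring test accepts off-site links that merely mention the domain, such as a share link. Comparing hosts with `parse_url()` avoids both. A minimal sketch; `isSameSite()` is a hypothetical helper, and ignoring a leading `www.` is an assumption matching what the `$hnam` code strips.

```php
<?php
/**
 * True when $href is on the same host as $startUrl, ignoring a leading "www."
 * (hypothetical helper; a stricter alternative to strpos($href, $hnam)).
 */
function isSameSite(string $href, string $startUrl): bool
{
    // parse_url() gives null for a missing host and false for a malformed URL;
    // both become '' here.
    $host  = strtolower((string) parse_url($href, PHP_URL_HOST));
    $start = strtolower((string) parse_url($startUrl, PHP_URL_HOST));

    $strip = static fn(string $h): string => preg_replace('/^www\./', '', $h);

    return $host !== '' && $strip($host) === $strip($start);
}

// Usage inside followLinks(), after createLink():
// if (!isSameSite($href, $startUrl)) { continue; }
```

Because `createLink()` already turns relative links into absolute ones, every `$href` reaching this check should have a host.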