-

Posts

24,615 -

Joined

-

Last visited

-

Days Won

835

Everything posted by Barand

-

-

Use SQL Query to Get Revised & New Orders

Barand replied to mongoose00318's topic in PHP Coding Help

I would recommend you split the order number in that case. Put the location (digits 1 and 2) in a location code column and the rest in the order number column. Aside from it being a poor design decision to have "structured" numbers in a single column, your users will have to use search expressions "LIKE '%x%' ". That will prevent any indexes from being used and greatly slow down any searches. -

Use SQL Query to Get Revised & New Orders

Barand replied to mongoose00318's topic in PHP Coding Help

Why? There is very little data in your query results that is LIKEable. LIKE is for matching string type columns against partial string values using wildcards E.G. Find all users with last name beginning with "J" who live in a location ending with "ham" SELECT … FROM user WHERE lastname LIKE 'j%' AND location LIKE '%ham'; Jones, Oldham Johnstone, Birmingham Joker, Gotham -

Combobox show information selected from database

Barand replied to ciresuark's topic in PHP Coding Help

The value should be the country code (which is what is in your customer table) -

Combobox show information selected from database

Barand replied to ciresuark's topic in PHP Coding Help

Is line 94 this one.. <option value="<?php echo $country['customerID']; ?> where you are trying to use "customer_id" as the value for your country, instead of the country_id/code? -

Combobox show information selected from database

Barand replied to ciresuark's topic in PHP Coding Help

Of course - all the options are in the combo but the relevant one is displayed. -

Combobox show information selected from database

Barand replied to ciresuark's topic in PHP Coding Help

Build you country combo options from the country table. The value for each option should be the id/code for the country Here's an example <?php $res = $db->query("SELECT customer_id , fname , lname , country FROM customer LIMIT 10 "); $tdata = ''; foreach ($res as $row) { $tdata .= "<tr> <td>{$row['fname']} {$row['lname']}</td> <td style='text-align: center;'><select name='country'>" . countryOptions($db, $row['country']) . "</select><td> </tr>"; } function countryOptions(PDO $db, $current) { $opts = "<option value=''>- select country -</option>\n"; $res = $db->query("SELECT country_id as id , country_name as name FROM country ORDER BY name "); foreach ($res as $row) { $sel = $row['id'] == $current ? 'selected' : ''; $opts .= "<option $sel value='{$row['id']}'>{$row['name']}</option>\n"; } return $opts; } ?> <!DOCTYPE html> <html lang="en"> <head> <meta http-equiv="Content-Type" content="text/html; charset=utf-8"> <title>Example</title> </head> <body> <table style='width: 600px;'> <tr><th>Name</th><th>Country</th></tr> <?=$tdata?> </table> </body> </html> Example data and ouput Table: customer Table: country +-------------+----------+-----------+---------+ +------------+--------------+ | customer_id | fname | lname | country | | country_id | country_name | +-------------+----------+-----------+---------+ +------------+--------------+ | 1 | Wanda | Denning | 1 | | 1 | England | | 2 | Tom | Westbrook | 2 | | 2 | Scotland | | 3 | Georgina | Jones | 3 | | 3 | Wales | | 4 | Hannah | Wentworth | 1 | | 4 | N. Ireland | | 5 | Roy | Egon | 4 | +------------+--------------+ | 6 | Len | Elridge | 1 | | 7 | Sam | Patterson | 2 | | 8 | Charles | Knight | 1 | | 9 | Gerald | Watts | 1 | | 10 | Tom | Bowles | 4 | +-------------+----------+-----------+---------+ -

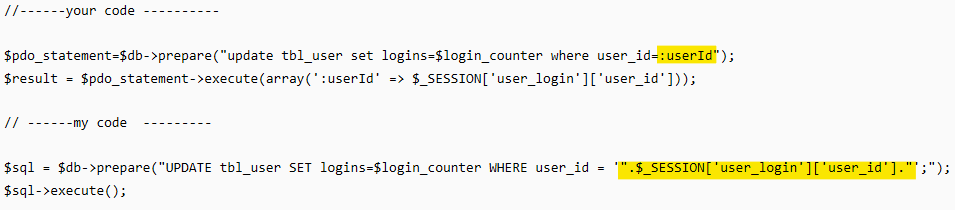

At the risk of stating the bleedin' obvious, the first set of code above uses a placeholder (:userId) and passes the user id value in the execute() call. This is the correct way to use prepare(). The second puts the user id value directly into the query string. BTW, you stored the user id in $_SESSION[''user_login'] and not in $_SESSION['user_login']['user_id'].

-

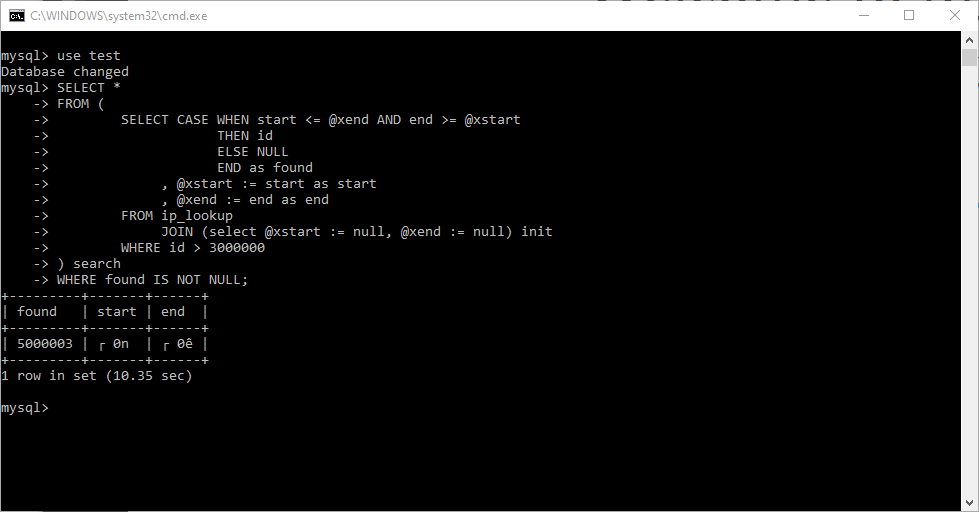

I had to check my table in 2 halves (id < 3,000,000 and id > 3,000,000) otherwise it timed out. mysql> SELECT count(*) FROM ip_lookup; +----------+ | count(*) | +----------+ | 5852250 | +----------+ 1 row in set (10.15 sec) mysql> SELECT * -> FROM ( -> SELECT CASE WHEN start <= @xend AND end >= @xstart -> THEN id -> ELSE NULL -> END as found -> , @xstart := start as start -> , @xend := end as end -> FROM ip_lookup -> JOIN (select @xstart := null, @xend := null) init -> WHERE id > 3000000 -> ) search -> WHERE found IS NOT NULL; +---------+-------+------+ | found | start | end | +---------+-------+------+ | 5000003 | ┌ 0n | ┌ 0ê | +---------+-------+------+ 1 row in set (27.00 sec) SELECT id , inet6_ntoa(start) as startA , inet6_ntoa(end) as endA FROM ip_lookup a WHERE id BETWEEN 5000001 AND 5000005; +---------+----------------+----------------+ | id | startA | endA | +---------+----------------+----------------+ | 5000001 | 218.255.48.86 | 218.255.48.102 | | 5000002 | 218.255.48.103 | 218.255.48.119 | << overlapping | 5000003 | 218.255.48.110 | 218.255.48.136 | << | 5000004 | 218.255.48.137 | 218.255.48.153 | | 5000005 | 218.255.48.154 | 218.255.48.170 | +---------+----------------+----------------+

-

1 ) That isn't how you did it in the first query. 2 ) It's wrong. 3 ) Never put variables into a query. That's why "prepare" is used.

-

The same way that you did with the username and email in the first query.

-

That will treat the symptom but the cure would be to fix the ip_lookup records that have the overlapping start and end values.

-

That is one of the prime reasons why many developers prefer PDO to mysqli. With PDO it doesn't matter whether you use query() or prepare(). The resulting objects in both cases have exactly the same methods. With mysqli there are two distinct sets of methods that you must use to process results depending on whether you used query (which gives a result object) or prepare (which gives a statement object).

-

You seem to be confusing your types. The string version of an IPv6 address ( xxxx:xxxx:xxxx:xxxx:xxxx:xxxx:xxxx:xxxx )requires varchar(40). Well, 39 to be precise. When converted to a numeric value with inet6_aton() then the output is varbinary(16).

-

I suspect it may be your use of inet_aton() and trying to match that against varbinary(16) but I don't know how your tables are currently defined or how the llokup start and end values were set (Mine were set using inet6_aton() to handlw both IPv4 and IPv6). My tables: CREATE TABLE `download` ( `id` int(16) NOT NULL AUTO_INCREMENT, `address` varchar(40) DEFAULT NULL, `ip_address` varbinary(16) DEFAULT NULL, `ref` int(25) unsigned DEFAULT NULL, PRIMARY KEY (`ID`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8; (No data yet) CREATE TABLE `ip_lookup` ( `id` int(11) NOT NULL AUTO_INCREMENT, `start` varbinary(16) DEFAULT NULL, `end` varbinary(16) DEFAULT NULL, PRIMARY KEY (`id`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8; +----+----------+----------+--------------+--------------+ | id | start | end | ip_start | ip_end | +----+----------+----------+--------------+--------------+ | 1 | FF010101 | FF320117 | 255.1.1.1 | 255.50.1.23 | | 2 | FF320118 | FF320164 | 255.50.1.24 | 255.50.1.100 | | 3 | FF320165 | FF3201C8 | 255.50.1.101 | 255.50.1.200 | | 4 | FF3201C9 | FF3204FF | 255.50.1.201 | 255.50.4.255 | +----+----------+----------+--------------+--------------+ My trigger: CREATE TRIGGER `download_BEFORE_INSERT` BEFORE INSERT ON `download` FOR EACH ROW BEGIN SET NEW.ip_address = inet6_aton(NEW.address), NEW.ref = (SELECT id FROM ip_lookup i WHERE NEW.ip_address BETWEEN i.start AND i.end ); END Add download data INSERT INTO download (address) VALUES ('255.50.1.20'), ('255.50.1.165'), ('255.50.1.100'), ('255.50.1.222'); View results: (as you can see - the trigger worked) SELECT d.id , d.address , hex(d.ip_address) as hex_address , d.ref , inet6_ntoa(i.start) as lookup_start , inet6_ntoa(i.end) as look_up_end FROM download d JOIN ip_lookup i ON d.ref = i.id; +----+--------------+-------------+------+--------------+--------------+ | id | address | hex_address | ref | lookup_start | look_up_end | +----+--------------+-------------+------+--------------+--------------+ | 1 | 255.50.1.20 | FF320114 | 1 | 255.1.1.1 | 255.50.1.23 | | 3 | 255.50.1.100 | FF320164 | 2 | 255.50.1.24 | 255.50.1.100 | | 2 | 255.50.1.165 | FF3201A5 | 3 | 255.50.1.101 | 255.50.1.200 | | 4 | 255.50.1.222 | FF3201DE | 4 | 255.50.1.201 | 255.50.4.255 | +----+--------------+-------------+------+--------------+--------------+

-

How to get results of two players stats (home and away)

Barand replied to Newbeeeee's topic in MySQL Help

Whereas that may be a suitable format for a spreadsheet table it is not fit for purpose in a relational database. Further, your choices of column type are totally unsuitable for the data in them. But as you won't be here much longer, that's all you'll get. -

Build your query string with http_build_query() $vals = [ 'id' => 123, 'name' => "Don't do that!" ]; $qstr = http_build_query($vals);

-

The $stmt variable is set only if $_GET['customerID'] exists. However you attempt to use it later in the code. As you are querying for a specific user and will get only a single record, why are you using a loop to process it? You should be using prepared statements and not putting values directly into your SQL statements.

-

Accumulate the total while you are processing the records $total_mass = 0; while($row_total_mass = mysqli_fetch_assoc($result_total_mass)) { echo $row_total_mass['sum'].'<br />'; $total_mass += $row_total_mass['sum']; } echo 'Total: ' . $total_mass;

-

Store part of array when array_search is successful

Barand replied to mongoose00318's topic in PHP Coding Help

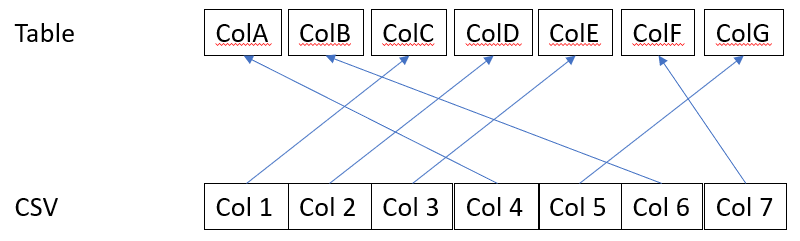

If you have something like this Then the columns section of the LOAD DATA statement would be (ColC, ColD, ColE, ColA, ColG, ColB, ColF) -

Store part of array when array_search is successful

Barand replied to mongoose00318's topic in PHP Coding Help

Yes it does - it defines the destination column for each column in the csv. -

An AJAX script returns everything in it's response that a normal script would send to the browser. Try calling ajax.php as a non-ajax request (in you browser as you would any other php script). What you see is what it would return in its response. Not when it comes to function names.

-

Store part of array when array_search is successful

Barand replied to mongoose00318's topic in PHP Coding Help

Either you haven't looked very hard or you don't rate the above example as "good" (or both) Of course, there is always the reference manual (https://dev.mysql.com/doc/refman/5.7/en/load-data.html) The final section of the above LOAD DATA statement defines just that. (@dummy, @dummy, enterprise, part_num, desc, qty, line_item, job_num, work_order, psm, date_change_flag, scheduled_ship_date, on_hold, on_hold_reason, total_hours, worfc) The first two columns are not used, hence put into dummy variables. The others define the column in the table that that column in the CSV should go. -

1 ) This is an English-speaking forum. 2 ) You have already posted this same question before and had replies. Don't double-post.

-

As you aren't checking for MySQL errors, put this line just before you connect with mysqli_connect() mysqli_report(MYSQLI_REPORT_ERROR|MYSQLI_REPORT_STRICT); That will check for errors for you.